Vision DataModules

The following are pre-built datamodules for computer vision.

Supervised learning

These are standard vision datasets whose train, val and test splits are pre-generated as DataLoaders, using the standard transforms (and normalization values).

BinaryEMNIST

- class pl_bolts.datamodules.binary_emnist_datamodule.BinaryEMNISTDataModule(data_dir=None, split='mnist', val_split=0.2, num_workers=0, normalize=False, batch_size=32, seed=42, shuffle=True, pin_memory=True, drop_last=False, strict_val_split=False, *args, **kwargs)

Bases: pl_bolts.datamodules.emnist_datamodule.EMNISTDataModule

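Please see EMNISTDataModule for more details.

Example:

```python
from pytorch_lightning import Trainer
from pl_bolts.datamodules import BinaryEMNISTDataModule

dm = BinaryEMNISTDataModule('.')
model = LitModel()  # LitModel stands for your own LightningModule
Trainer().fit(model, datamodule=dm)
```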

- Parameters

  - split (str) – The dataset has 6 different splits: byclass, bymerge, balanced, letters, digits and mnist. This argument is passed to torchvision.datasets.EMNIST.
  - val_split (Union[int, float]) – Fraction (float) or number (int) of samples to use for the validation split.
  - num_workers (int) – How many workers to use for loading data.
  - seed (int) – Random seed to be used for train/val/test splits.
  - shuffle (bool) – If True, shuffles the train data every epoch.
  - pin_memory (bool) – If True, the data loader will copy Tensors into CUDA pinned memory before returning them.
  - drop_last (bool) – If True, drops the last incomplete batch.
  - strict_val_split (bool) – If True, uses the validation split defined in the paper and ignores val_split. Note that it only works with the "balanced", "digits", "letters" and "mnist" splits.
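The drop_last flag decides whether a trailing partial batch is yielded. As a rough illustration (plain Python; num_batches is a hypothetical helper, not part of pl_bolts), this is the number of batches per epoch that a PyTorch DataLoader with a fixed batch_size produces:

```python
import math


def num_batches(num_samples: int, batch_size: int, drop_last: bool) -> int:
    """Batches per epoch for a DataLoader-style iterator with a fixed batch size."""
    if drop_last:
        # The trailing, incomplete batch is discarded.
        return num_samples // batch_size
    # The trailing batch is kept even if it holds fewer than batch_size samples.
    return math.ceil(num_samples / batch_size)


print(num_batches(1000, 32, drop_last=False))  # 32 (the last batch holds 8 samples)
print(num_batches(1000, 32, drop_last=True))   # 31
```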

BinaryMNIST

- class pl_bolts.datamodules.binary_mnist_datamodule.BinaryMNISTDataModule(data_dir=None, val_split=0.2, num_workers=0, normalize=False, batch_size=32, seed=42, shuffle=True, pin_memory=True, drop_last=False, *args, **kwargs)

Bases: pl_bolts.datamodules.vision_datamodule.VisionDataModule

- Specs:
  - 10 classes (1 per digit)
  - Each image is (1 x 28 x 28)

Binary MNIST train, val and test splits and transforms.

Transforms:

```python
from torchvision import transforms as transform_lib

mnist_transforms = transform_lib.Compose([
    transform_lib.ToTensor(),
])
```
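ToTensor() scales pixels to [0, 1]. The binary variant then maps each pixel to either 0 or 1. The plain-Python sketch below assumes a fixed threshold of 0.5. That value is an assumption about the dataset internals and is not stated on this page. binarize is a hypothetical helper.

```python
from typing import List

THRESHOLD = 0.5  # assumed cut-off, not confirmed by this page


def binarize(pixels: List[float], threshold: float = THRESHOLD) -> List[float]:
    """Map intensities already scaled to [0, 1] onto {0.0, 1.0}."""
    return [1.0 if p >= threshold else 0.0 for p in pixels]


# ToTensor() has already divided the uint8 values by 255
raw = [0, 64, 127, 128, 200, 255]
scaled = [p / 255 for p in raw]
print(binarize(scaled))  # [0.0, 0.0, 0.0, 1.0, 1.0, 1.0]
```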

Example:

```python
from pytorch_lightning import Trainer
from pl_bolts.datamodules import BinaryMNISTDataModule

dm = BinaryMNISTDataModule('.')
model = LitModel()  # LitModel stands for your own LightningModule
Trainer().fit(model, datamodule=dm)
```

- Parameters

  - val_split (Union[int, float]) – Fraction (float) or number (int) of samples to use for the validation split.
  - num_workers (int) – How many workers to use for loading data.
  - seed (int) – Random seed to be used for train/val/test splits.
  - shuffle (bool) – If True, shuffles the train data every epoch.
  - pin_memory (bool) – If True, the data loader will copy Tensors into CUDA pinned memory before returning them.

Cityscapes

- class pl_bolts.datamodules.cityscapes_datamodule.CityscapesDataModule(data_dir, quality_mode='fine', target_type='instance', num_workers=0, batch_size=32, seed=42, shuffle=True, pin_memory=True, drop_last=False, train_transforms=None, val_transforms=None, test_transforms=None, target_transforms=None, *args, **kwargs)

Bases: pytorch_lightning.core.datamodule.LightningDataModule

Warning

The feature CityscapesDataModule is currently marked under review. The compatibility with other Lightning projects is not guaranteed and API may change at any time. The API and functionality may change without warning in future releases. More details: https://lightning-bolts.readthedocs.io/en/latest/stability.html

Standard Cityscapes train, val and test splits and transforms.

- Note: You need to have downloaded the Cityscapes dataset first and provide the path to where it is saved.

You can download the dataset here: https://www.cityscapes-dataset.com/

- Specs:
  - 30 classes (road, person, sidewalk, etc.)
  - (image, target) pairs: image dims (3 x 1024 x 2048), target dims (1024 x 2048)

Transforms:

```python
from torchvision import transforms as transform_lib

transforms = transform_lib.Compose([
    transform_lib.ToTensor(),
    transform_lib.Normalize(
        mean=[0.28689554, 0.32513303, 0.28389177],
        std=[0.18696375, 0.19017339, 0.18720214],
    ),
])
```
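Normalize subtracts a per-channel mean and divides by a per-channel standard deviation. The sketch below shows that arithmetic in plain Python for a single RGB pixel, using the Cityscapes statistics above. normalize_pixel is a hypothetical name.

```python
from typing import List, Sequence

# Per-channel statistics from the Cityscapes transform (R, G, B)
MEAN = [0.28689554, 0.32513303, 0.28389177]
STD = [0.18696375, 0.19017339, 0.18720214]


def normalize_pixel(rgb: Sequence[float], mean: Sequence[float] = MEAN,
                    std: Sequence[float] = STD) -> List[float]:
    """Apply (x - mean) / std channel by channel, as Normalize does per pixel."""
    return [(x - m) / s for x, m, s in zip(rgb, mean, std)]


print(normalize_pixel(MEAN))  # [0.0, 0.0, 0.0]: the dataset mean maps to zero
```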

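Example:

```python
from pytorch_lightning import Trainer
from pl_bolts.datamodules import CityscapesDataModule

PATH = '/path/to/cityscapes'  # placeholder: directory containing leftImg8bit and gtFine/gtCoarse
dm = CityscapesDataModule(PATH)
model = LitModel()  # LitModel stands for your own LightningModule
Trainer().fit(model, datamodule=dm)
```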

Or you can set your own transforms.

Example:

```python
dm.train_transforms = ...
dm.test_transforms = ...
dm.val_transforms = ...
dm.target_transforms = ...
```

- Parameters

  - data_dir (str) – Path to the dataset root, i.e. the directory where leftImg8bit and gtFine or gtCoarse are located.
  - quality_mode (str) – The quality mode to use, either ‘fine’ or ‘coarse’.
  - target_type (str) – The targets to use, either ‘instance’ or ‘semantic’.
  - num_workers (int) – How many workers to use for loading data.
  - batch_size (int) – Number of examples per training/eval step.
  - seed (int) – Random seed to be used for train/val/test splits.
  - pin_memory (bool) – If True, the data loader will copy Tensors into CUDA pinned memory before returning them.
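data_dir is expected to contain leftImg8bit plus either gtFine or gtCoarse. The sketch below shows how quality_mode selects the annotation folder and how the documented option values can be validated. cityscapes_dirs is a hypothetical helper, and the per-split subfolders are modeled on the layout that torchvision's Cityscapes dataset reads. It is not the datamodule's own code.

```python
import os
from typing import Tuple

ANNOTATION_DIRS = {"fine": "gtFine", "coarse": "gtCoarse"}
TARGET_TYPES = {"instance", "semantic"}


def cityscapes_dirs(data_dir: str, split: str = "train", quality_mode: str = "fine",
                    target_type: str = "instance") -> Tuple[str, str]:
    """Return (image_dir, annotation_dir) for one split; raise on unsupported options."""
    if quality_mode not in ANNOTATION_DIRS:
        raise ValueError(f"quality_mode must be 'fine' or 'coarse', got {quality_mode!r}")
    if target_type not in TARGET_TYPES:
        raise ValueError(f"target_type must be 'instance' or 'semantic', got {target_type!r}")
    image_dir = os.path.join(data_dir, "leftImg8bit", split)
    annotation_dir = os.path.join(data_dir, ANNOTATION_DIRS[quality_mode], split)
    return image_dir, annotation_dir


print(cityscapes_dirs("/data/cityscapes", quality_mode="coarse"))
# on POSIX: ('/data/cityscapes/leftImg8bit/train', '/data/cityscapes/gtCoarse/train')
```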

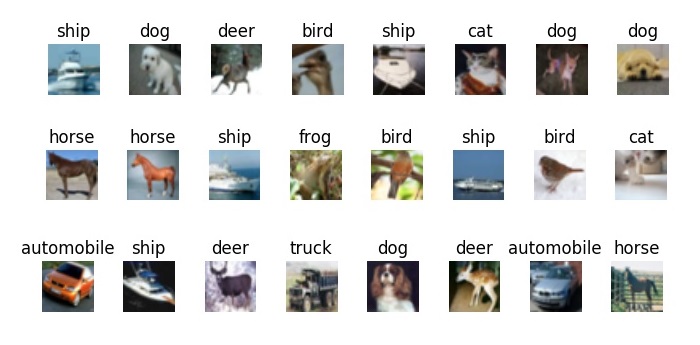

CIFAR-10

- class pl_bolts.datamodules.cifar10_datamodule.CIFAR10DataModule(data_dir=None, val_split=0.2, num_workers=0, normalize=False, batch_size=32, seed=42, shuffle=True, pin_memory=True, drop_last=False, *args, **kwargs)

Bases: pl_bolts.datamodules.vision_datamodule.VisionDataModule

- Specs:
  - 10 classes (1 per class)
  - Each image is (3 x 32 x 32)

Standard CIFAR-10 train, val and test splits and transforms.

Transforms:

```python
from torchvision import transforms as transform_lib

transforms = transform_lib.Compose([
    transform_lib.ToTensor(),
    transform_lib.Normalize(
        mean=[x / 255.0 for x in [125.3, 123.0, 113.9]],
        std=[x / 255.0 for x in [63.0, 62.1, 66.7]],
    ),
])
```
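The CIFAR-10 statistics are written on the 0-255 pixel scale. They are divided by 255 because ToTensor() rescales pixels to [0, 1]. Evaluating those list comprehensions in plain Python gives the values that the Normalize step actually receives:

```python
# Channel statistics quoted on the 0-255 pixel scale (R, G, B)
MEAN_255 = [125.3, 123.0, 113.9]
STD_255 = [63.0, 62.1, 66.7]

# ToTensor() rescales pixels to [0, 1], so the statistics are rescaled the same way
mean = [x / 255.0 for x in MEAN_255]
std = [x / 255.0 for x in STD_255]

print([round(m, 4) for m in mean])  # [0.4914, 0.4824, 0.4467]
print([round(s, 4) for s in std])   # [0.2471, 0.2435, 0.2616]
```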

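Example:

```python
from pytorch_lightning import Trainer
from pl_bolts.datamodules import CIFAR10DataModule

PATH = '/path/to/data'  # placeholder: where the dataset is (or will be) stored
dm = CIFAR10DataModule(PATH)
model = LitModel()  # LitModel stands for your own LightningModule
Trainer().fit(model, datamodule=dm)
```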

Or you can set your own transforms.

Example:

```python
dm.train_transforms = ...
dm.test_transforms = ...
dm.val_transforms = ...
```

- Parameters

  - val_split (Union[int, float]) – Fraction (float) or number (int) of samples to use for the validation split.
  - num_workers (int) – How many workers to use for loading data.
  - seed (int) – Random seed to be used for train/val/test splits.
  - shuffle (bool) – If True, shuffles the train data every epoch.
  - pin_memory (bool) – If True, the data loader will copy Tensors into CUDA pinned memory before returning them.
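val_split accepts either a fraction or an absolute count. The sketch below shows one way to turn it into [train, val] lengths for random splitting. split_lengths is a hypothetical helper. Rounding the fractional case down with int() is an assumption, not documented pl_bolts behavior. CIFAR-10 provides 50,000 training images.

```python
from typing import List, Union


def split_lengths(len_dataset: int, val_split: Union[int, float]) -> List[int]:
    """Return [train_len, val_len] for a float fraction or an int sample count."""
    if isinstance(val_split, int):
        val_len = val_split  # absolute number of validation samples
    elif isinstance(val_split, float):
        val_len = int(val_split * len_dataset)  # fraction of the dataset, rounded down
    else:
        raise TypeError(f"val_split must be int or float, got {type(val_split).__name__}")
    return [len_dataset - val_len, val_len]


print(split_lengths(50_000, 0.2))   # [40000, 10000]
print(split_lengths(50_000, 5000))  # [45000, 5000]
```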

EMNIST

- class pl_bolts.datamodules.emnist_datamodule.EMNISTDataModule(data_dir=None, split='mnist', val_split=0.2, num_workers=0, normalize=False, batch_size=32, seed=42, shuffle=True, pin_memory=True, drop_last=False, strict_val_split=False, *args, **kwargs)

Bases: pl_bolts.datamodules.vision_datamodule.VisionDataModule

Dataset information (source: EMNIST: an extension of MNIST to handwritten letters, Table II):

| Split Name | No. classes | Train set size | Test set size | Validation set | Total size |
|---|---|---|---|---|---|
| "byclass" | 62 | 697,932 | 116,323 | No | 814,255 |
| "bymerge" | 47 | 697,932 | 116,323 | No | 814,255 |
| "balanced" | 47 | 112,800 | 18,800 | Yes | 131,600 |
| "digits" | 10 | 240,000 | 40,000 | Yes | 280,000 |
| "letters" | 37 | 88,800 | 14,800 | Yes | 103,600 |
| "mnist" | 10 | 60,000 | 10,000 | Yes | 70,000 |

- Parameters

  - split (str) – The dataset has 6 different splits: byclass, bymerge, balanced, letters, digits and mnist. This argument is passed to torchvision.datasets.EMNIST.
  - val_split (Union[int, float]) – Fraction (float) or number (int) of samples to use for the validation split.
  - num_workers (int) – How many workers to use for loading data.
  - seed (int) – Random seed to be used for train/val/test splits.
  - shuffle (bool) – If True, shuffles the train data every epoch.
  - pin_memory (bool) – If True, the data loader will copy Tensors into CUDA pinned memory before returning them.
  - drop_last (bool) – If True, drops the last incomplete batch.
  - strict_val_split (bool) – If True, uses the validation split defined in the paper and ignores val_split. Note that it only works with the "balanced", "digits", "letters" and "mnist" splits.
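The strict_val_split rules above can be summarized in a small hypothetical helper. This is not pl_bolts code. It returns None when the paper's validation split replaces val_split. Rejecting unsupported splits with a ValueError is an assumption about how such a mismatch should be handled.

```python
from typing import Optional, Union

ALL_SPLITS = {"byclass", "bymerge", "balanced", "letters", "digits", "mnist"}
# Splits whose "Validation set" column in the table reads "Yes"
STRICT_VAL_SPLITS = {"balanced", "digits", "letters", "mnist"}


def effective_val_split(split: str, val_split: Union[int, float],
                        strict_val_split: bool) -> Optional[Union[int, float]]:
    """Return the val_split that applies, or None when the paper's split is used."""
    if split not in ALL_SPLITS:
        raise ValueError(f"Unknown EMNIST split {split!r}")
    if strict_val_split:
        if split not in STRICT_VAL_SPLITS:
            raise ValueError(f"strict_val_split is not supported for split {split!r}")
        return None  # val_split is ignored in favor of the paper's validation split
    return val_split


print(effective_val_split("balanced", 0.2, strict_val_split=True))  # None
print(effective_val_split("bymerge", 0.2, strict_val_split=False))  # 0.2
```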

Here are the default EMNIST train, val and test splits and transforms.

Transforms:

```python
from torchvision import transforms as transform_lib

emnist_transforms = transform_lib.Compose([
    transform_lib.ToTensor(),
])
```

Example:

```python
from pytorch_lightning import Trainer
from pl_bolts.datamodules import EMNISTDataModule

dm = EMNISTDataModule('.')
model = LitModel()  # LitModel stands for your own LightningModule
Trainer().fit(model, datamodule=dm)
```

- Parameters

  - val_split (Union[int, float]) – Fraction (float) or number (int) of samples to use for the validation split.
  - num_workers (int) – How many workers to use for loading data.
  - seed (int) – Random seed to be used for train/val/test splits.
  - shuffle (bool) – If True, shuffles the train data every epoch.
  - pin_memory (bool) – If True, the data loader will copy Tensors into CUDA pinned memory before returning them.
  - train_transforms – Transformations to apply to the train dataset.
  - val_transforms – Transformations to apply to the validation dataset.
  - test_transforms – Transformations to apply to the test dataset.

FashionMNIST

- class pl_bolts.datamodules.fashion_mnist_datamodule.FashionMNISTDataModule(data_dir=None, val_split=0.2, num_workers=0, normalize=False, batch_size=32, seed=42, shuffle=True, pin_memory=True, drop_last=False, *args, **kwargs)

Bases: pl_bolts.datamodules.vision_datamodule.VisionDataModule

- Parameters

  - val_split (Union[int, float]) – Fraction (float) or number (int) of samples to use for the validation split.
  - num_workers (int) – Number of workers to use for loading data.
  - seed (int) – Random seed to be used for train/val/test splits.
  - shuffle (bool) – If True, shuffles the train data every epoch.
  - pin_memory (bool) – If True, the data loader will copy Tensors into CUDA pinned memory before returning them.
  - drop_last (bool) – If True, drops the last incomplete batch.

- Specs:
  - 10 classes (1 per type)
  - Each image is (1 x 28 x 28)

Standard FashionMNIST train, val and test splits and transforms.

Transforms:

```python
from torchvision import transforms as transform_lib

mnist_transforms = transform_lib.Compose([
    transform_lib.ToTensor(),
])
```
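For a grayscale PIL image, ToTensor() produces a float tensor of shape (1 x H x W) with every value divided by 255. That is where the (1 x 28 x 28) shape in the specs comes from. The following is a pure-Python illustration of the conversion. to_tensor_like is a hypothetical stand-in, not torchvision's implementation.

```python
from typing import List


def to_tensor_like(image: List[List[int]]) -> List[List[List[float]]]:
    """Scale an H x W grid of uint8 pixels to [0, 1] and add a leading channel axis."""
    return [[[pixel / 255.0 for pixel in row] for row in image]]


img = [[0, 255], [51, 102]]  # a tiny 2 x 2 grayscale "image"
out = to_tensor_like(img)
print(len(out), len(out[0]), len(out[0][0]))  # 1 2 2, i.e. shape (1 x 2 x 2)
print(out[0][1])  # [0.2, 0.4]
```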

Example:

```python
from pytorch_lightning import Trainer
from pl_bolts.datamodules import FashionMNISTDataModule

dm = FashionMNISTDataModule('.')
model = LitModel()  # LitModel stands for your own LightningModule
Trainer().fit(model, datamodule=dm)
```

- Parameters

  - val_split (Union[int, float]) – Fraction (float) or number (int) of samples to use for the validation split.
  - num_workers (int) – How many workers to use for loading data.
  - seed (int) – Random seed to be used for train/val/test splits.
  - shuffle (bool) – If True, shuffles the train data every epoch.
  - pin_memory (bool) – If True, the data loader will copy Tensors into CUDA pinned memory before returning them.
  - train_transforms – Transformations to apply to the train dataset.
  - val_transforms – Transformations to apply to the validation dataset.
  - test_transforms – Transformations to apply to the test dataset.

Imagenet

- class pl_bolts.datamodules.imagenet_datamodule.ImagenetDataModule(data_dir, meta_dir=None, num_imgs_per_val_class=50, image_size=224, num_workers=0, batch_size=32, shuffle=True, pin_memory=True, drop_last=False, *args, **kwargs)

Bases: pytorch_lightning.core.datamodule.LightningDataModule

Warning

The feature ImagenetDataModule is currently marked under review. The compatibility with other Lightning projects is not guaranteed and API may change at any time. The API and functionality may change without warning in future releases. More details: https://lightning-bolts.readthedocs.io/en/latest/stability.html

- Specs:
  - 1000 classes
  - Each image is (3 x varies x varies) (here we default to 3 x 224 x 224)

ImageNet train, val and test dataloaders.

The train set is the ImageNet train set.

The val set is taken from the train set with num_imgs_per_val_class images per class. For example, if num_imgs_per_val_class=2, there will be 2,000 images in the validation set.

The test set is the official ImageNet validation set.
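The validation set size is num_imgs_per_val_class multiplied by the 1000 classes. The sketch below shows a per-class hold-out on toy (path, label) pairs. hold_out_per_class is hypothetical. Taking the first images of each class is an assumption, and the datamodule may pick them differently.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

Sample = Tuple[str, int]  # (image path, class index)


def hold_out_per_class(samples: List[Sample],
                       num_imgs_per_val_class: int) -> Tuple[List[Sample], List[Sample]]:
    """Send the first num_imgs_per_val_class samples of every class to val, the rest to train."""
    taken: Dict[int, int] = defaultdict(int)
    train: List[Sample] = []
    val: List[Sample] = []
    for path, label in samples:
        if taken[label] < num_imgs_per_val_class:
            val.append((path, label))
            taken[label] += 1
        else:
            train.append((path, label))
    return train, val


# toy data: 3 classes with 5 images each
toy = [(f"class{c}/img{i}.jpeg", c) for c in range(3) for i in range(5)]
train, val = hold_out_per_class(toy, num_imgs_per_val_class=2)
print(len(train), len(val))  # 9 6

# with 1000 ImageNet classes, the default of 50 per class gives 50,000 validation images
print(1000 * 50)  # 50000
```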

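Example:

```python
from pytorch_lightning import Trainer
from pl_bolts.datamodules import ImagenetDataModule

IMAGENET_PATH = '/path/to/imagenet'  # placeholder: where ImageNet was downloaded
dm = ImagenetDataModule(IMAGENET_PATH)
model = LitModel()  # LitModel stands for your own LightningModule
Trainer().fit(model, datamodule=dm)
```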


- prepare_data()

This method assumes you have already downloaded imagenet2012. It validates the data using the meta.bin file.

Warning

Please download ImageNet yourself first.

- train_dataloader()

Uses the train split of imagenet2012 and sets aside a portion of it for the validation split.

- train_transform()

The standard ImageNet transforms.

```python
from torchvision import transforms as transform_lib

# evaluated inside the datamodule, where self.image_size defaults to 224
transform_lib.Compose([
    transform_lib.RandomResizedCrop(self.image_size),
    transform_lib.RandomHorizontalFlip(),
    transform_lib.ToTensor(),
    transform_lib.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225]),
])
```

- val_dataloader()

Uses the portion of the imagenet2012 train split that was held out from training via num_imgs_per_val_class.

- val_transform()

The standard ImageNet transforms for validation.

```python
from torchvision import transforms as transform_lib

# evaluated inside the datamodule, where self.image_size defaults to 224
transform_lib.Compose([
    transform_lib.Resize(self.image_size + 32),
    transform_lib.CenterCrop(self.image_size),
    transform_lib.ToTensor(),
    transform_lib.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225]),
])
```
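At evaluation time, the image is first resized so that its shorter side is image_size + 32 (256 for the default of 224). It is then center-cropped to image_size. The geometry can be sketched in plain Python. Both helpers are hypothetical and only approximate torchvision's internal rounding.

```python
from typing import Tuple


def resize_shorter_side(width: int, height: int, size: int) -> Tuple[int, int]:
    """New (width, height) so that the shorter side equals size, keeping the aspect ratio."""
    if width <= height:
        return size, int(size * height / width)
    return int(size * width / height), size


def center_crop_box(width: int, height: int, crop: int) -> Tuple[int, int, int, int]:
    """(left, top, right, bottom) of a crop x crop window centered in the image."""
    left = int(round((width - crop) / 2.0))
    top = int(round((height - crop) / 2.0))
    return left, top, left + crop, top + crop


image_size = 224
w, h = resize_shorter_side(600, 400, image_size + 32)  # like Resize(256)
print((w, h))                             # (384, 256)
print(center_crop_box(w, h, image_size))  # (80, 16, 304, 240)
```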

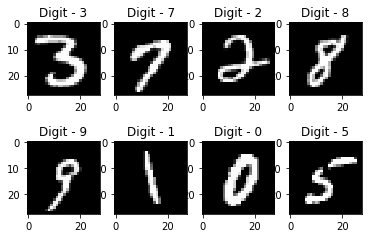

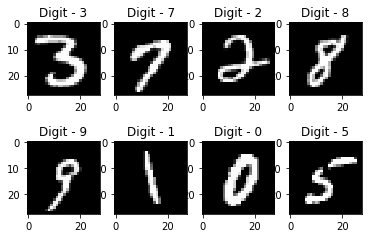

MNIST

- class pl_bolts.datamodules.mnist_datamodule.MNISTDataModule(data_dir=None, val_split=0.2, num_workers=0, normalize=False, batch_size=32, seed=42, shuffle=True, pin_memory=True, drop_last=False, *args, **kwargs)

Bases: pl_bolts.datamodules.vision_datamodule.VisionDataModule

- Specs:
  - 10 classes (1 per digit)
  - Each image is (1 x 28 x 28)

Standard MNIST train, val and test splits and transforms.

Transforms:

```python
from torchvision import transforms as transform_lib

mnist_transforms = transform_lib.Compose([
    transform_lib.ToTensor(),
])
```

Example:

```python
from pytorch_lightning import Trainer
from pl_bolts.datamodules import MNISTDataModule

dm = MNISTDataModule('.')
model = LitModel()  # LitModel stands for your own LightningModule
Trainer().fit(model, datamodule=dm)
```

- Parameters

  - val_split (Union[int, float]) – Fraction (float) or number (int) of samples to use for the validation split.
  - num_workers (int) – How many workers to use for loading data.
  - seed (int) – Random seed to be used for train/val/test splits.
  - shuffle (bool) – If True, shuffles the train data every epoch.
  - pin_memory (bool) – If True, the data loader will copy Tensors into CUDA pinned memory before returning them.
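The seed argument makes the train/val split reproducible. The stdlib sketch below shows the idea with a local random.Random. seeded_split is hypothetical. pl_bolts is assumed to split with PyTorch's own random number generator, so the actual indices will differ. MNIST has 60,000 training images, so val_split=0.2 holds out 12,000 of them.

```python
import random
from typing import List, Tuple


def seeded_split(num_samples: int, val_len: int, seed: int = 42) -> Tuple[List[int], List[int]]:
    """Shuffle indices with a fixed seed and cut them into train and val index lists."""
    indices = list(range(num_samples))
    random.Random(seed).shuffle(indices)  # local RNG: the global random state is untouched
    return indices[val_len:], indices[:val_len]


train_a, val_a = seeded_split(60_000, 12_000, seed=42)
train_b, val_b = seeded_split(60_000, 12_000, seed=42)
print(val_a == val_b)            # True: same seed, same split
print(len(train_a), len(val_a))  # 48000 12000
```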

Semi-supervised learning

The following datasets have support for unlabeled training and semi-supervised learning where only a few examples are labeled.

Imagenet (ssl)

- class pl_bolts.datamodules.ssl_imagenet_datamodule.SSLImagenetDataModule(data_dir, meta_dir=None, num_workers=0, batch_size=32, shuffle=True, pin_memory=True, drop_last=False, *args, **kwargs)

Bases: pytorch_lightning.core.datamodule.LightningDataModule

Warning

The feature SSLImagenetDataModule is currently marked under review. The compatibility with other Lightning projects is not guaranteed and API may change at any time. The API and functionality may change without warning in future releases. More details: https://lightning-bolts.readthedocs.io/en/latest/stability.html

- prepare_data_per_node

If True, the process with LOCAL_RANK=0 on each node calls prepare_data. Otherwise, only the process with NODE_RANK=0 and LOCAL_RANK=0 prepares data.

- allow_zero_length_dataloader_with_multiple_devices

If True, a dataloader with zero length on a local rank is allowed. Default value is False.

- prepare_data()

Use this to download and prepare data. Downloading and saving data with multiple processes (distributed settings) will result in corrupted data. Lightning ensures this method is called only within a single process, so you can safely add your downloading logic within.

Warning

DO NOT set state on the model (use setup instead), since this is NOT called on every device.

Example:

```python
def prepare_data(self):
    # good (download_data, tokenize and etc are placeholders for your own functions)
    download_data()
    tokenize()
    etc()

    # bad
    self.split = data_split
    self.some_state = some_other_state()
```

In a distributed environment, prepare_data can be called in two ways (using prepare_data_per_node):

- Once per node. This is the default and is only called on LOCAL_RANK=0.
- Once in total. Only called on GLOBAL_RANK=0.

Example:

```python
from pytorch_lightning import LightningDataModule


# DEFAULT
# called once per node on LOCAL_RANK=0 of that node
class LitDataModule(LightningDataModule):
    def __init__(self):
        super().__init__()
        self.prepare_data_per_node = True


# call on GLOBAL_RANK=0 (great for shared file systems)
class LitDataModule(LightningDataModule):
    def __init__(self):
        super().__init__()
        self.prepare_data_per_node = False
```
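To make the two modes concrete, the hypothetical function below encodes the documented rule for which process runs prepare_data(). It is not Lightning's own code.

```python
def calls_prepare_data(node_rank: int, local_rank: int, prepare_data_per_node: bool) -> bool:
    """Whether the process at (node_rank, local_rank) runs prepare_data()."""
    if prepare_data_per_node:
        return local_rank == 0  # one process on every node
    return node_rank == 0 and local_rank == 0  # a single process in total


# 2 nodes with 4 processes each
for per_node in (True, False):
    chosen = [(node, local) for node in range(2) for local in range(4)
              if calls_prepare_data(node, local, per_node)]
    print(per_node, chosen)
# True [(0, 0), (1, 0)]
# False [(0, 0)]
```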

This is called before requesting the dataloaders:

```python
model.prepare_data()
initialize_distributed()
model.setup(stage)
model.train_dataloader()
model.val_dataloader()
model.test_dataloader()
model.predict_dataloader()
```

- test_dataloader(num_images_per_class, add_normalize=False)

Implement one or multiple PyTorch DataLoaders for testing.

For data processing use the following pattern:

- download in prepare_data()
- process and split in setup()

However, the above are only necessary for distributed processing.

Warning

Do not assign state in prepare_data.

See also: test(), setup()

Note

Lightning adds the correct sampler for distributed and arbitrary hardware. There is no need to set it yourself.

- Returns

A torch.utils.data.DataLoader or a sequence of them specifying testing samples.

Example:

```python
import torch
from torchvision import transforms
from torchvision.datasets import MNIST


def test_dataloader(self):
    transform = transforms.Compose([transforms.ToTensor(), transforms.Normalize((0.5,), (1.0,))])
    dataset = MNIST(root='/path/to/mnist/', train=False, transform=transform, download=True)
    loader = torch.utils.data.DataLoader(dataset=dataset, batch_size=self.batch_size, shuffle=False)
    return loader


# can also return multiple dataloaders
def test_dataloader(self):
    return [loader_a, loader_b, ..., loader_n]
```

Note

If you don’t need a test dataset and a test_step(), you don’t need to implement this method.

Note

In the case where you return multiple test dataloaders, the test_step() will have an argument dataloader_idx which matches the order here.
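The following is a simplified, framework-free sketch of how a list of test dataloaders maps onto dataloader_idx. run_test_loop and the list-of-batches stand-ins are hypothetical, and Lightning's real evaluation loop does much more.

```python
from typing import Dict, List


def run_test_loop(loaders: List[list]) -> Dict[int, int]:
    """Feed every batch to a test_step-like function, tagged with its loader's index."""
    counts: Dict[int, int] = {}

    def test_step(batch, batch_idx: int, dataloader_idx: int) -> None:
        # count how many batches arrive from each dataloader
        counts[dataloader_idx] = counts.get(dataloader_idx, 0) + 1

    for dataloader_idx, loader in enumerate(loaders):  # index follows the returned order
        for batch_idx, batch in enumerate(loader):
            test_step(batch, batch_idx, dataloader_idx)
    return counts


# two stand-in "dataloaders", each given as a list of batches
print(run_test_loop([[["a"], ["b"]], [["c"]]]))  # {0: 2, 1: 1}
```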

- train_dataloader(num_images_per_class=-1, add_normalize=False)

Implement one or more PyTorch DataLoaders for training.

- Returns

A collection of torch.utils.data.DataLoader specifying training samples. In the case of multiple dataloaders, please see this section.

The dataloader you return will not be reloaded unless you set reload_dataloaders_every_n_epochs to a positive integer.

For data processing use the following pattern:

- download in prepare_data()
- process and split in setup()

However, the above are only necessary for distributed processing.

Warning

Do not assign state in prepare_data.

See also: fit(), setup()

Note

Lightning adds the correct sampler for distributed and arbitrary hardware. There is no need to set it yourself.

Example:

```python
import torch
from torchvision import transforms
from torchvision.datasets import CIFAR10, MNIST


# single dataloader
def train_dataloader(self):
    transform = transforms.Compose([transforms.ToTensor(), transforms.Normalize((0.5,), (1.0,))])
    dataset = MNIST(root='/path/to/mnist/', train=True, transform=transform, download=True)
    loader = torch.utils.data.DataLoader(dataset=dataset, batch_size=self.batch_size, shuffle=True)
    return loader


# multiple dataloaders, return as list
# (MNIST(...) and CIFAR10(...) stand for fully configured datasets)
def train_dataloader(self):
    mnist = MNIST(...)
    cifar = CIFAR10(...)
    mnist_loader = torch.utils.data.DataLoader(dataset=mnist, batch_size=self.batch_size, shuffle=True)
    cifar_loader = torch.utils.data.DataLoader(dataset=cifar, batch_size=self.batch_size, shuffle=True)
    # each batch will be a list of tensors: [batch_mnist, batch_cifar]
    return [mnist_loader, cifar_loader]


# multiple dataloaders, return as dict
def train_dataloader(self):
    mnist = MNIST(...)
    cifar = CIFAR10(...)
    mnist_loader = torch.utils.data.DataLoader(dataset=mnist, batch_size=self.batch_size, shuffle=True)
    cifar_loader = torch.utils.data.DataLoader(dataset=cifar, batch_size=self.batch_size, shuffle=True)
    # each batch will be a dict of tensors: {'mnist': batch_mnist, 'cifar': batch_cifar}
    return {'mnist': mnist_loader, 'cifar': cifar_loader}
```
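In the SSL datamodule signatures, num_images_per_class defaults to -1 for train_dataloader and to 50 for val_dataloader. Reading -1 as "use every image" is an assumption. Under that reading, a per-class cap can be sketched as follows (limit_per_class is hypothetical):

```python
from typing import Dict, List


def limit_per_class(files_by_class: Dict[str, List[str]],
                    num_images_per_class: int) -> Dict[str, List[str]]:
    """Keep at most num_images_per_class files per class; -1 keeps every file."""
    if num_images_per_class == -1:
        return {cls: list(files) for cls, files in files_by_class.items()}
    return {cls: files[:num_images_per_class] for cls, files in files_by_class.items()}


files = {"class_a": ["a.jpeg", "b.jpeg", "c.jpeg"], "class_b": ["d.jpeg", "e.jpeg"]}
print(limit_per_class(files, -1))  # all five files
print(limit_per_class(files, 1))   # {'class_a': ['a.jpeg'], 'class_b': ['d.jpeg']}
```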

- val_dataloader(num_images_per_class=50, add_normalize=False)

Implement one or multiple PyTorch DataLoaders for validation.

The dataloader you return will not be reloaded unless you set reload_dataloaders_every_n_epochs to a positive integer.

It’s recommended that all data downloads and preparation happen in prepare_data().

See also: fit(), validate(), setup()

Note

Lightning adds the correct sampler for distributed and arbitrary hardware. There is no need to set it yourself.

- Returns

A torch.utils.data.DataLoader or a sequence of them specifying validation samples.

Examples:

```python
import torch
from torchvision import transforms
from torchvision.datasets import MNIST


def val_dataloader(self):
    transform = transforms.Compose([transforms.ToTensor(), transforms.Normalize((0.5,), (1.0,))])
    dataset = MNIST(root='/path/to/mnist/', train=False, transform=transform, download=True)
    loader = torch.utils.data.DataLoader(dataset=dataset, batch_size=self.batch_size, shuffle=False)
    return loader


# can also return multiple dataloaders
def val_dataloader(self):
    return [loader_a, loader_b, ..., loader_n]
```

Note

If you don’t need a validation dataset and a validation_step(), you don’t need to implement this method.

Note

In the case where you return multiple validation dataloaders, the validation_step() will have an argument dataloader_idx which matches the order here.

STL-10

- class pl_bolts.datamodules.stl10_datamodule.STL10DataModule(data_dir=None, unlabeled_val_split=5000, train_val_split=500, num_workers=0, batch_size=32, seed=42, shuffle=True, pin_memory=True, drop_last=False, *args, **kwargs)

Bases: pytorch_lightning.core.datamodule.LightningDataModule

Warning

The feature STL10DataModule is currently marked under review. The compatibility with other Lightning projects is not guaranteed and API may change at any time. The API and functionality may change without warning in future releases. More details: https://lightning-bolts.readthedocs.io/en/latest/stability.html

- Specs:
  - 10 classes (1 per type)
  - Each image is (3 x 96 x 96)

Standard STL-10 train, val and test splits and transforms. STL-10 supports validation splits on either the labeled or the unlabeled data.

Transforms:

```python
from torchvision import transforms as transform_lib

transforms = transform_lib.Compose([
    transform_lib.ToTensor(),
    transform_lib.Normalize(mean=(0.43, 0.42, 0.39), std=(0.27, 0.26, 0.27)),
])
```

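Example:

```python
from pytorch_lightning import Trainer
from pl_bolts.datamodules import STL10DataModule

PATH = '/path/to/data'  # placeholder: where the dataset is (or will be) stored
dm = STL10DataModule(PATH)
model = LitModel()  # LitModel stands for your own LightningModule
Trainer().fit(model, datamodule=dm)
```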

- Parameters

  - unlabeled_val_split (int) – How many images from the unlabeled training split to use for validation.
  - train_val_split (int) – How many images from the labeled training split to use for validation.
  - num_workers (int) – How many workers to use for loading data.
  - seed (int) – Random seed to be used for train/val/test splits.
  - pin_memory (bool) – If True, the data loader will copy Tensors into CUDA pinned memory before returning them.

- train_dataloader()

Loads the ‘unlabeled’ split minus a portion set aside for validation via unlabeled_val_split.

- train_dataloader_mixed()

Loads a portion of the ‘unlabeled’ training data and the ‘train’ (labeled) data. Both portions have a subset removed for validation via unlabeled_val_split and train_val_split.

- val_dataloader()

Loads a portion of the ‘unlabeled’ training data set aside for validation.

The val dataset consists of the unlabeled_val_split images held out from ‘unlabeled’.

- val_dataloader_mixed()

Loads a portion of the ‘unlabeled’ training data set aside for validation, along with the portion of the ‘train’ dataset to be used for validation.

- unlabeled_val = the unlabeled_val_split images held out from ‘unlabeled’
- labeled_val = the train_val_split images held out from ‘train’
- full_val = unlabeled_val + labeled_val
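With the default arguments, the four STL-10 loaders draw on the following numbers of images. The arithmetic assumes the standard STL-10 release, which has 100,000 unlabeled and 5,000 labeled training images. It also assumes that each hold-out count is removed from its split exactly once. stl10_split_sizes is a hypothetical helper.

```python
# Standard STL-10 sizes: 100,000 unlabeled and 5,000 labeled training images
UNLABELED_SIZE = 100_000
TRAIN_SIZE = 5_000


def stl10_split_sizes(unlabeled_val_split: int = 5000, train_val_split: int = 500) -> dict:
    """Number of images behind each dataloader for the given hold-out counts."""
    unlabeled_train = UNLABELED_SIZE - unlabeled_val_split
    labeled_train = TRAIN_SIZE - train_val_split
    return {
        "train": unlabeled_train,                            # train_dataloader()
        "val": unlabeled_val_split,                          # val_dataloader()
        "train_mixed": unlabeled_train + labeled_train,      # train_dataloader_mixed()
        "val_mixed": unlabeled_val_split + train_val_split,  # val_dataloader_mixed()
    }


print(stl10_split_sizes())
# {'train': 95000, 'val': 5000, 'train_mixed': 99500, 'val_mixed': 5500}
```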